Adversarial Robustness Against The Union Of Multiple Perturbation Models - How Is It Used In AI?

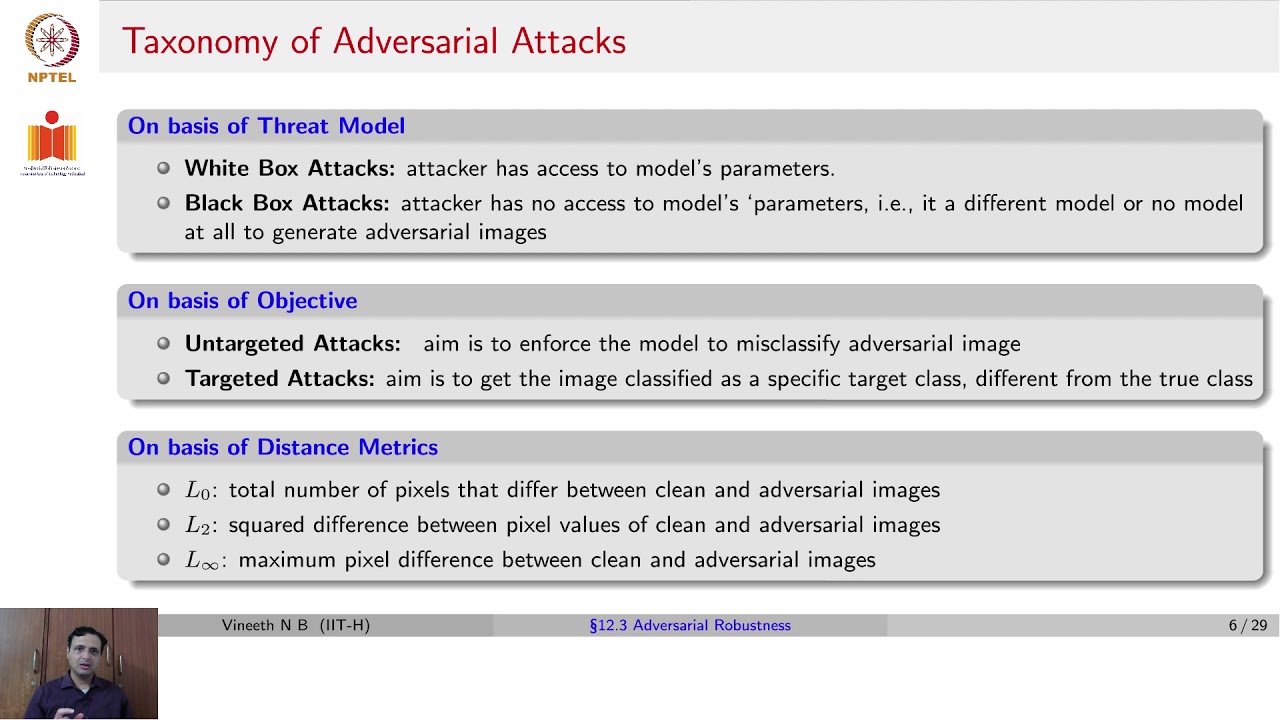

Because deep learning systems are vulnerable to adversarial attacks, a great deal of effort has gone into building classifiers that are robust, both empirically and certifiably. While most research has focused on defending against a single attack type, several recent studies have investigated adversarial robustness against the union of multiple perturbation models. However, these approaches can be difficult to tune, and they can easily yield uneven degrees of robustness across the individual perturbation models, resulting in a suboptimal worst-case loss over the union.

Author: Suleman Shah | Reviewer: Han Ju | Nov 06, 2022

A natural generalization of the standard PGD-based procedure combines several perturbation models into a single attack by taking the worst case over all steepest descent directions. Adversarial training against this combined attack targets the worst-case loss across the union rather than the loss under any single perturbation model.
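To make the idea of "worst case over all steepest descent directions" concrete, here is a minimal, dependency-free sketch. It is an illustration under stated assumptions, not the authors' implementation: the toy linear classifier, the logistic loss, the function names, the step sizes in `alpha`, and the radii in `eps` are all hypothetical choices made for this example. At each iteration it takes one projected steepest-ascent step per norm (l∞, l2, l1) and keeps whichever candidate perturbation produces the highest loss.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def loss(w, b, x, y):
    """Logistic loss of a toy linear classifier; y is -1 or +1."""
    return math.log1p(math.exp(-y * (dot(w, x) + b)))

def grad_x(w, b, x, y):
    """Gradient of the logistic loss with respect to the input x."""
    coeff = -y / (1.0 + math.exp(y * (dot(w, x) + b)))
    return [coeff * wi for wi in w]

def sign(t):
    return (t > 0) - (t < 0)

def project_l1(v, eps):
    """Euclidean projection onto the l1 ball of radius eps (sort-based)."""
    if sum(abs(t) for t in v) <= eps:
        return list(v)
    running, theta = 0.0, 0.0
    for k, u in enumerate(sorted((abs(t) for t in v), reverse=True), start=1):
        running += u
        if u - (running - eps) / k > 0:
            theta = (running - eps) / k
    return [sign(t) * max(abs(t) - theta, 0.0) for t in v]

def step_linf(delta, g, alpha, eps):
    # Steepest ascent for l_inf is the sign of the gradient; project by clipping.
    return [max(-eps, min(eps, d + alpha * sign(gi))) for d, gi in zip(delta, g)]

def step_l2(delta, g, alpha, eps):
    # Steepest ascent for l2 is the normalized gradient; project by rescaling.
    g_norm = math.sqrt(dot(g, g)) or 1.0
    moved = [d + alpha * gi / g_norm for d, gi in zip(delta, g)]
    m_norm = math.sqrt(dot(moved, moved))
    scale = min(1.0, eps / m_norm) if m_norm > 0 else 1.0
    return [scale * m for m in moved]

def step_l1(delta, g, alpha, eps):
    # Steepest ascent for l1 moves only the coordinate with the largest gradient.
    i = max(range(len(g)), key=lambda j: abs(g[j]))
    moved = list(delta)
    moved[i] += alpha * sign(g[i])
    return project_l1(moved, eps)

STEPS = {"linf": step_linf, "l2": step_l2, "l1": step_l1}

def msd_attack(w, b, x, y, eps, alpha, n_steps=20):
    """Each iteration tries one projected step per norm and keeps the worst case."""
    delta = [0.0] * len(x)
    for _ in range(n_steps):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        g = grad_x(w, b, x_adv, y)
        candidates = [STEPS[p](delta, g, alpha[p], eps[p]) for p in STEPS]
        delta = max(
            candidates,
            key=lambda d: loss(w, b, [xi + di for xi, di in zip(x, d)], y),
        )
    return delta

# Hypothetical toy problem: three input features, one correctly classified point.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [1.0, 0.5, -0.2], 1
eps = {"linf": 0.3, "l2": 0.5, "l1": 1.0}
alpha = {"linf": 0.05, "l2": 0.1, "l1": 0.2}
delta = msd_attack(w, b, x, y, eps, alpha)
```

Because every candidate is projected onto its own ball before the comparison, the perturbation returned always lies inside at least one of the three balls, that is, inside the union. In a real setting the analytic gradient would be replaced by automatic differentiation through the network.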

Classical Adversarial Training Frameworks

Despite recent progress in adversarial training-based defenses, deep neural networks remain vulnerable to adversarial attacks of a perturbation type other than the one they were trained to withstand. Protector is a two-stage pipeline designed to improve robustness across a wide variety of perturbation types: a top-level perturbation classifier first predicts the type of perturbation applied to an input, and the input is then handled by a second-level predictor specialized for that type.

When defending against the union of L1, L2, and L∞ attacks, Protector outperforms previous adversarial training-based defenses by more than 5%. There is an inherent conflict between attacking the top-level perturbation classifier and attacking the second-level predictors: strong attacks on the second-level predictors make it easier for the perturbation classifier to identify the perturbation type, while fooling the perturbation classifier requires weaker (or less representative) attacks on the second-level predictors.
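The two-stage structure can be sketched in a few lines. This is only an illustration of the routing idea, not Protector's actual code: `protector_predict`, `toy_perturbation_classifier`, and `toy_specialists` are hypothetical names, and the stand-in models below are fixed stubs rather than trained networks.

```python
from typing import Callable, Dict, Sequence

Input = Sequence[float]

def protector_predict(
    x: Input,
    perturbation_classifier: Callable[[Input], str],
    specialists: Dict[str, Callable[[Input], int]],
) -> int:
    """Stage 1 guesses the perturbation type; stage 2 uses the matching predictor."""
    perturbation_type = perturbation_classifier(x)
    return specialists[perturbation_type](x)

# Hypothetical stand-ins so the sketch runs end to end (not trained models).
def toy_perturbation_classifier(x: Input) -> str:
    return "l2"

toy_specialists = {
    "linf": lambda x: 0,  # predictor assumed robust to L-infinity attacks
    "l2": lambda x: 1,    # predictor assumed robust to L2 attacks
    "l1": lambda x: 2,    # predictor assumed robust to L1 attacks
}

label = protector_predict([0.1, 0.2], toy_perturbation_classifier, toy_specialists)
```

The design choice this highlights is that an attacker has to defeat both stages at once: the perturbation must mislead the router and still be strong enough to fool whichever specialist it is routed to.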

Because attacks on the two levels conflict, even an imperfect perturbation classifier can considerably improve the model's overall robustness. Traditional adversarial training frameworks, by contrast, are narrow in scope: they maximize adversarial accuracy against one specific class of attacks, such as perturbations bounded in a single norm.

Recent extensions of adversarial training target defenses against the union of several perturbation models, but this benefit comes at the cost of a large (up to 10-fold) increase in training complexity compared with training against a single attack.

Shaped Noise Augmented Processing improves the adversarial accuracy of ResNet-18 on CIFAR-10 against the union of (l∞, l2, l1) perturbations, and benchmarks are also reported for ResNet-50 and ResNet-101 on ImageNet.

People Also Ask

Are Adversarial Robustness And Common Perturbation Robustness Independent Attributes?

Robustness against adversarial attacks and robustness against common perturbations are two separate properties.

What Is Ensemble Adversarial Training?

Ensemble Adversarial Training augments the training data with perturbations transferred from other models.

What Does Model Robustness Mean?

Model robustness refers to how much a model's performance changes on new data compared with the data it was trained on.

Final Words

The PGD generalization described above has the benefit of directly converging upon a trade-off between the perturbation models. With this approach, standard architectures can be trained to be robust against l∞, l2, and l1 attacks simultaneously, outperforming prior approaches on the MNIST and CIFAR10 datasets. On CIFAR10, it raises adversarial accuracy against the union of (l∞, l2, l1) perturbations with radii ε = (0.03, 0.5, 12) from 40.6% to 47.0%.
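As a rough illustration of what "adversarial accuracy against the union" measures, the sketch below counts an example as robust only if the model stays correct under every attack in the union. The function names and the per-example outcomes are hypothetical and are not taken from the experiments quoted above.

```python
def per_attack_accuracy(correct_flags):
    """Fraction of examples the model still classifies correctly under one attack."""
    return sum(correct_flags) / len(correct_flags)

def union_accuracy(correct_under_attack):
    """An example counts only if it is classified correctly under every attack."""
    survived = [all(flags) for flags in zip(*correct_under_attack.values())]
    return sum(survived) / len(survived)

# Hypothetical outcomes for five test examples (True = still correct under attack).
outcomes = {
    "linf": [True, True, False, True, True],
    "l2":   [True, False, True, True, True],
    "l1":   [True, True, True, False, True],
}

single = {name: per_attack_accuracy(flags) for name, flags in outcomes.items()}
union = union_accuracy(outcomes)
```

In this toy data each attack alone leaves four of five examples correct, but only two examples survive all three, so the union accuracy is lower than every single-attack accuracy. This is why uneven robustness across perturbation models hurts the worst-case figure.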
